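- import: import the required modules.

- Preprocessing: preprocess the data needed for training.

- Modeling (model): define the model.

- Compile (compile): configure the model's optimizer, loss, and metrics.

- Training (fit): train the model.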

Image data: loading the data

1) Use load_data() to get the train and validation splits.

from tensorflow.keras.datasets import fashion_mnist

(x_train, y_train), (x_valid, y_valid) = fashion_mnist.load_data()

Preprocessing

1) Image normalization: every pixel is an 8-bit value from 0 to 255, so dividing by 255.0 rescales it to the range 0 to 1.

x_train = x_train / 255.0

x_valid = x_valid / 255.0
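As a small illustration of what the division does, here is a plain-Python sketch on a made-up 2x3 "image". The pixel values and the `normalize` helper are hypothetical and only stand in for the NumPy arrays used in the post.

```python
# Hypothetical 2x3 grayscale "image" with 8-bit pixel values (0-255).
image = [
    [0, 64, 128],
    [191, 255, 32],
]

def normalize(pixels):
    """Divide every pixel by 255.0 so values fall in the range [0.0, 1.0]."""
    return [[value / 255.0 for value in row] for row in pixels]

normalized = normalize(image)
flat = [value for row in normalized for value in row]
print(min(flat), max(flat))  # 0.0 1.0
```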

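2) Flatten: 2D -> 1D

from tensorflow.keras.layers import Dense, Flatten

x = Flatten(input_shape=(28, 28))  # print the shape of x_train first; 28 * 28 -> 784

print(x(x_train).shape)

3) Dense layer: the final output layer uses 'softmax' for multi-class classification and 'sigmoid' for simple (binary) classification.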

Dense(10, activation='softmax')
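To make the difference between the two output activations concrete, the following is a minimal plain-Python sketch of the math, not the Keras implementation. The input scores are made up for illustration.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1 (multi-class output)."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(z):
    """Squash a single raw score into the interval (0, 1) (binary output)."""
    return 1.0 / (1.0 + math.exp(-z))

class_probs = softmax([2.0, 1.0, 0.1])  # hypothetical scores for 3 classes
binary_prob = sigmoid(0.0)              # hypothetical score for a single output unit

print(class_probs, sum(class_probs))
print(binary_prob)
```

Softmax spreads probability across all classes, which is why the output layer has one unit per class, whereas sigmoid gives a single probability for one unit.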

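Model definition (Sequential)

1) Stack the layers inside a Sequential model.

- Flatten the input: 2D -> 1D

- Use activation='relu' on the hidden Dense layers

- The number of output units in the last layer equals the number of classes to predict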

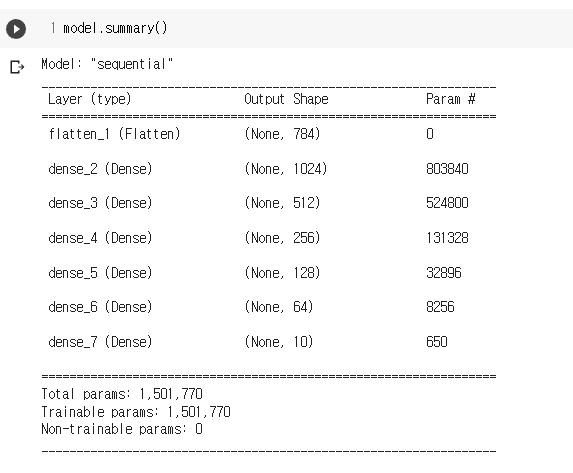

from tensorflow.keras.models import Sequential

model = Sequential([
    # Flatten the input shape
    Flatten(input_shape=(28, 28)),  # 28 * 28 -> a flat vector of 784 values
    # Dense layers
    Dense(1024, activation='relu'),
    Dense(512, activation='relu'),
    Dense(256, activation='relu'),
    Dense(128, activation='relu'),
    Dense(64, activation='relu'),
    # Softmax for classification
    Dense(10, activation='softmax'),
])

Check how the number of parameters shrinks from layer to layer (for example, with model.summary()).
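The shrinking parameter counts can also be worked out by hand. The sketch below assumes the standard Dense layer layout (one weight per input-output pair plus one bias per output unit) and uses the layer widths from the model above. `dense_params` is a helper name introduced here for illustration.

```python
# Layer widths from the Sequential model: 784 inputs after Flatten,
# then the Dense layers in order.
layer_sizes = [28 * 28, 1024, 512, 256, 128, 64, 10]

def dense_params(n_in, n_out):
    """A Dense layer has n_in * n_out weights plus n_out biases."""
    return n_in * n_out + n_out

counts = [
    dense_params(n_in, n_out)
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])
]

for units, count in zip(layer_sizes[1:], counts):
    print(f"Dense({units}): {count:,} parameters")
print(f"Total: {sum(counts):,}")
```

The first Dense layer dominates the total because it connects all 784 inputs to 1024 units.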

Compile (compile)

- optimizer: 'adam'

- loss:

  - (activation) sigmoid: binary_crossentropy

  - (activation) softmax:

    - one-hot encoded labels (O): categorical_crossentropy

    - integer labels, not one-hot encoded (X): sparse_categorical_crossentropy

- metrics: 'acc' or 'accuracy'

model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['acc'])
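The two softmax losses differ only in the label format they expect. As a rough sketch of the underlying formula for a single example (not the Keras implementation, and with made-up probabilities), both give the same value:

```python
import math

def sparse_categorical_crossentropy(label, probs):
    """Loss when the label is an integer class index, e.g. 2."""
    return -math.log(probs[label])

def categorical_crossentropy(one_hot, probs):
    """Loss when the label is a one-hot vector, e.g. [0, 0, 1]."""
    return -sum(t * math.log(p) for t, p in zip(one_hot, probs))

probs = [0.1, 0.2, 0.7]  # hypothetical softmax output for 3 classes
loss_sparse = sparse_categorical_crossentropy(2, probs)
loss_onehot = categorical_crossentropy([0, 0, 1], probs)

print(loss_sparse, loss_onehot)
```

Fashion MNIST labels come as integers, which is why the compile call here uses the sparse variant.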

Training (fit)

ModelCheckpoint: creates a checkpoint callback that saves the best model so far, judged by val_loss at the end of each epoch.

- checkpoint_path

- ModelCheckpoint

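from tensorflow.keras.callbacks import ModelCheckpoint

# Note: Keras 3 (TensorFlow 2.16+) expects this path to end in ".weights.h5" when save_weights_only=True.
checkpoint_path = "my_checkpoint.ckpt"

checkpoint = ModelCheckpoint(filepath=checkpoint_path,
                             save_weights_only=True,
                             save_best_only=True,
                             monitor='val_loss',
                             verbose=1)

Training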

history = model.fit(x_train, y_train,
                    validation_data=(x_valid, y_valid),
                    epochs=20,
                    callbacks=[checkpoint],
                    )
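The effect of save_best_only=True with monitor='val_loss' can be pictured with a simplified sketch of the rule: a save happens only when val_loss improves on the best value seen so far. The function name and the loss values below are hypothetical, not real training output.

```python
def epochs_that_save(val_losses):
    """Return the 1-based epochs at which a save-best-only rule would save.

    A save happens only when the monitored value (here val_loss) is lower
    than every value seen in earlier epochs.
    """
    best = float("inf")
    saved = []
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best = loss
            saved.append(epoch)
    return saved

# Hypothetical validation losses for 6 epochs.
val_losses = [0.52, 0.41, 0.43, 0.38, 0.40, 0.39]
print(epochs_that_save(val_losses))  # [1, 2, 4]
```

In this made-up run, the file on disk after training would hold the weights from epoch 4, not from the final epoch.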

After training: load weights (ModelCheckpoint)

# Pass the file name used to save the checkpoint.

model.load_weights(checkpoint_path)

Want to validate after training?

model.evaluate(x_valid, y_valid)

Visualizing training loss / acc

import matplotlib.pyplot as plt

import numpy as np

plt.figure(figsize=(12, 9))

plt.plot(np.arange(1, 21), history.history['loss'])

plt.plot(np.arange(1, 21), history.history['val_loss'])

plt.title('Loss / Val Loss', fontsize=20)

plt.xlabel('Epochs')

plt.ylabel('Loss')

plt.legend(['loss', 'val_loss'], fontsize=15)

plt.show()

plt.figure(figsize=(12, 9))

plt.plot(np.arange(1, 21), history.history['acc'])

plt.plot(np.arange(1, 21), history.history['val_acc'])

plt.title('Acc / Val Acc', fontsize=20)

plt.xlabel('Epochs')

plt.ylabel('Acc')

plt.legend(['acc', 'val_acc'], fontsize=15)

plt.show()
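The plots above hard-code np.arange(1, 21), which matches epochs=20. If the number of epochs changes, the x and y lengths no longer agree. One possible alternative is to derive the x-axis from the recorded history, sketched here with a hypothetical stand-in dictionary for history.history:

```python
# Hypothetical stand-in for history.history: one value per trained epoch.
history_dict = {
    "loss": [0.9, 0.6, 0.5],
    "val_loss": [1.0, 0.7, 0.65],
}

def epoch_axis(history_dict, key="loss"):
    """Build the x-axis values 1..N from the number of recorded epochs."""
    return list(range(1, len(history_dict[key]) + 1))

epochs = epoch_axis(history_dict)
print(epochs)  # [1, 2, 3]
```

The resulting list could then replace np.arange(1, 21) in each plt.plot call.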

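Numeric (tabular) data: loading the data

1) Use tensorflow-datasets (tfds) to split the data into train and validation sets.

import tensorflow_datasets as tfds

train_dataset = tfds.load('iris', split='train[:80%]')

valid_dataset = tfds.load('iris', split='train[80%:]')

# valid_dataset = tfds.load('iris', split='train[-20%:]')  # alternative: take the last 20%

Preprocessing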

- One-hot encode the label values.

- Split each example into feature (x) and label (y).

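import tensorflow as tf

def preprocess(data):
    x = data['features']  # the 4 input features
    y = data['label']     # integer class label (0, 1, or 2)
    y = tf.one_hot(y, 3)  # one-hot encode into 3 classes
    return x, y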
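To show what this preprocessing produces for a single record, here is a plain-Python mirror of the same steps. The `one_hot` and `preprocess_example` helpers and the sample record are hypothetical and only imitate the TensorFlow version.

```python
def one_hot(index, depth):
    """Return a list of length `depth` with 1.0 at `index` and 0.0 elsewhere."""
    return [1.0 if i == index else 0.0 for i in range(depth)]

def preprocess_example(example):
    """Split a record into features (x) and a one-hot encoded label (y)."""
    x = example["features"]
    y = one_hot(example["label"], 3)
    return x, y

# Hypothetical iris-like record: 4 measurements and an integer class label.
example = {"features": [5.1, 3.5, 1.4, 0.2], "label": 2}
x, y = preprocess_example(example)
print(x, y)
```

In a tf.data pipeline, a function like preprocess is typically applied with the dataset's map method and then batched. The batch size is a choice the post does not specify.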

Model definition (Sequential)

model = tf.keras.models.Sequential([
    # X has 4 features, so input_shape is set to (4,).
    # The input is already 1D tabular data, not 2D, so no Flatten is needed.
    Dense(512, activation='relu', input_shape=(4,)),
    Dense(256, activation='relu'),
    Dense(128, activation='relu'),
    Dense(64, activation='relu'),
    Dense(32, activation='relu'),
    # Softmax for classification; number of classes = 3
    Dense(3, activation='softmax'),
])

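From compile onward, the process is the same as for the image data, except that the labels here are one-hot encoded, so the loss should be categorical_crossentropy rather than sparse_categorical_crossentropy.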
